Runtime Governance for Enterprise AI

AI agents and vendor-hosted AI systems can act autonomously, call tools, and move data across enterprise boundaries. Most organizations have principles and oversight processes, but no technical control point once AI is deployed.

Runtime enforcement

Govern AI at execution time

Vendor governance

Control third-party AI systems

Immutable audit trail

Complete compliance records

The Problem

AI governance today is mostly advisory.

Enterprises face growing risk from:

- Internal AI agents acting without clear execution boundaries

- Vendor-hosted AI systems outside direct control

- The absence of a unified way to enforce which models, tools, or services AI can access

- Incomplete auditability when legal teams, security teams, or regulators ask what happened

Policies exist. Enforcement does not.

Control AI at Runtime

Enforce policy, access, and auditability at execution time.

Govern every agent action before it reaches models, tools, or vendors.

With Kavora, organizations can:

- Control model and tool access

- Enforce policy across agents and vendors

- Change behavior without redeploys

- Capture an immutable audit trail

All AI execution passes through Kavora first.

Kavora treats AI like production infrastructure, not an experiment.
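To make the audit-trail idea concrete, here is a minimal sketch of one common way to make a log tamper-evident: hash chaining, where each record stores the hash of the record before it. This is an illustration under stated assumptions, not a description of Kavora's implementation. The names `append_record`, `verify`, and `GENESIS` are hypothetical, and a production system would also need write-once storage and key management that this sketch leaves out.

```python
import hashlib
import json

# Hypothetical starting value for the chain (an assumption for this sketch).
GENESIS = "0" * 64


def _digest(prev_hash, event):
    """Hash the previous record's hash together with this event."""
    payload = json.dumps({"prev": prev_hash, "event": event}, sort_keys=True)
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def append_record(log, event):
    """Append an event, linking it to the hash of the previous record."""
    prev_hash = log[-1]["hash"] if log else GENESIS
    record = {"event": event, "prev": prev_hash, "hash": _digest(prev_hash, event)}
    log.append(record)
    return record


def verify(log):
    """Return True only if every record still matches its stored hash and link."""
    prev_hash = GENESIS
    for record in log:
        if record["prev"] != prev_hash:
            return False
        if record["hash"] != _digest(prev_hash, record["event"]):
            return False
        prev_hash = record["hash"]
    return True


log = []
append_record(log, {"agent": "billing-agent", "target": "model:internal", "decision": "allow"})
append_record(log, {"agent": "billing-agent", "target": "vendor:crm", "decision": "block"})
print(verify(log))

# Editing an earlier record breaks the chain, so verification fails.
log[0]["event"]["decision"] = "block"
print(verify(log))
```

Chaining does not prevent edits by itself. It makes them detectable, which is the property an auditor or regulator typically needs.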

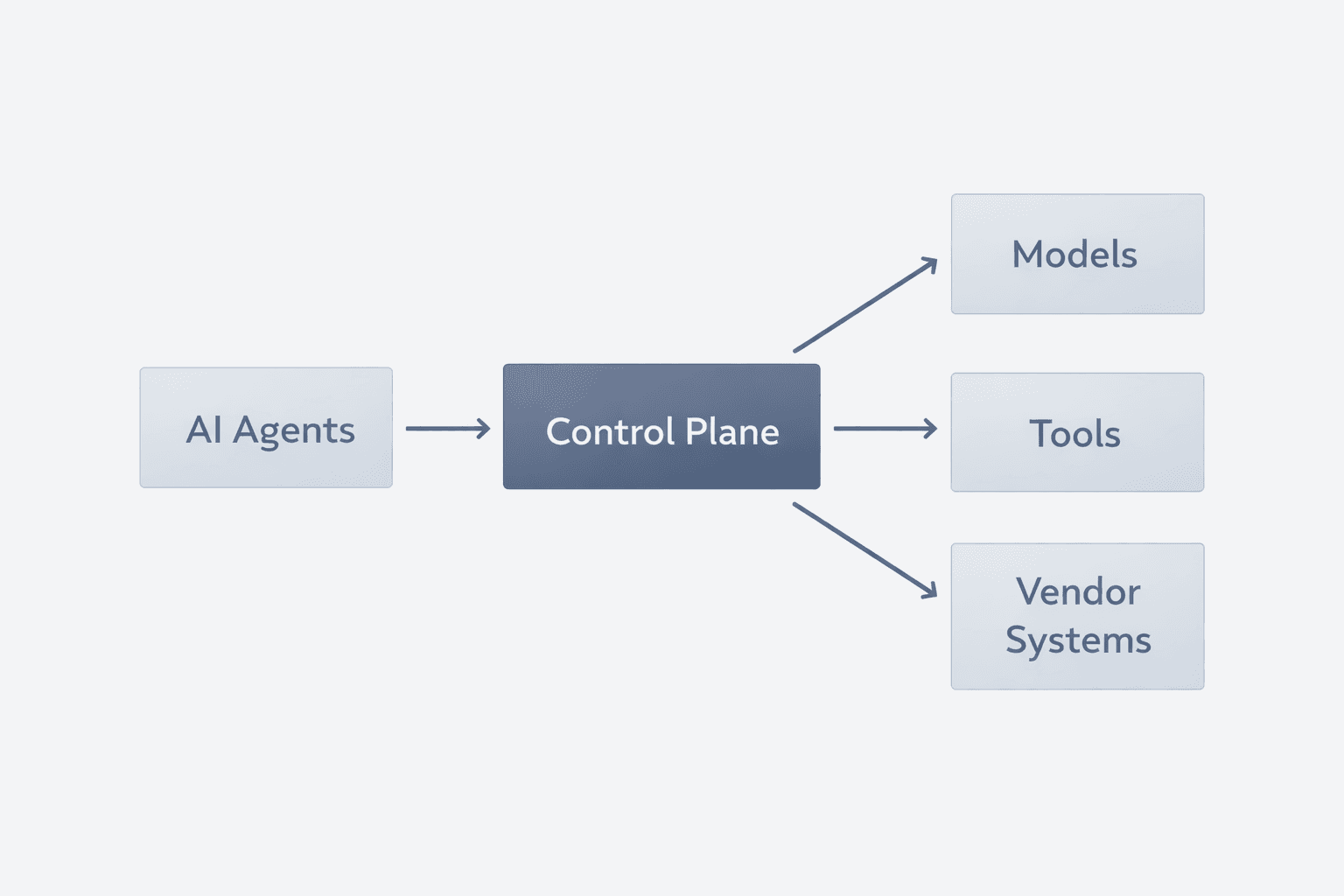

How It Works

(Conceptually)

Kavora inserts a governed control plane between AI systems and the resources they interact with.

Controlled boundary

AI agents operate within a controlled execution boundary

Policy mediation

Outbound calls to models, tools, and services are mediated by policy

Audit + investigation

All activity is logged for audit, investigation, and compliance

Unauthorized actions are blocked or safely degraded

This approach mirrors how network security evolved from guidelines to firewalls.
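The pattern described above can be sketched in a few lines. This is a conceptual illustration only, not Kavora's API: `Policy`, `ControlPlane`, the target names, and the default-deny rule are all assumptions made for the example. Every outbound call goes through one mediation point, which decides to allow, degrade, or block it, and records the decision.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from enum import Enum
from typing import Callable, Optional


class Decision(Enum):
    ALLOW = "allow"
    DEGRADE = "degrade"
    BLOCK = "block"


@dataclass
class Policy:
    # Hypothetical policy shape: targets an agent may call in full, and
    # targets that are permitted only in a reduced ("degraded") form.
    allowed: set = field(default_factory=set)
    degraded: set = field(default_factory=set)

    def decide(self, target: str) -> Decision:
        if target in self.allowed:
            return Decision.ALLOW
        if target in self.degraded:
            return Decision.DEGRADE
        return Decision.BLOCK  # default deny (an assumption of this sketch)


@dataclass
class ControlPlane:
    policy: Policy
    audit_log: list = field(default_factory=list)

    def call(self, agent: str, target: str,
             action: Callable[[], str], fallback: str = "") -> Optional[str]:
        """Mediate one outbound call and log the decision before acting."""
        decision = self.policy.decide(target)
        self.audit_log.append(
            {"agent": agent, "target": target, "decision": decision.value}
        )
        if decision is Decision.ALLOW:
            return action()
        if decision is Decision.DEGRADE:
            return fallback  # safe substitute instead of the real call
        return None  # blocked: the action never runs


plane = ControlPlane(Policy(allowed={"model:internal"}, degraded={"tool:search"}))
print(plane.call("support-agent", "model:internal", lambda: "model reply"))
print(plane.call("support-agent", "tool:search", lambda: "live results", fallback="cached results"))
print(plane.call("support-agent", "vendor:crm", lambda: "exported data"))

# Swapping the policy object changes behavior at runtime, with no redeploy
# of the agent itself.
plane.policy = Policy(allowed={"vendor:crm"})
print(plane.call("support-agent", "vendor:crm", lambda: "exported data"))
```

The design choice worth noting is that the agent never calls a model, tool, or vendor directly. Because the decision and the log entry happen in the same place, nothing can execute without leaving a record.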

Why This Matters Now

AI systems are moving from decision support to autonomous execution.

That shift creates new enterprise risk:

- Execution without approval

- Data movement without visibility

- Vendor AI without enforceable constraints

Organizations need governance that works after deployment, not just before.

Who Kavora Is For

Our Philosophy

- Governance should be enforceable, not aspirational

- Runtime control beats static review

- Visibility comes before deep inspection

- Enterprises should retain control without slowing innovation

Kavora is designed to start lightweight and scale enforcement as trust and requirements mature.

Team

Built by operators who have worked in both security infrastructure and real-world AI deployment.

Christian is a systems and security-focused technologist with three decades of experience building and operating servers, services, and applications across and beyond the Microsoft ecosystem. He founded ShwaTech LLC in New York City in 2024 to expand the reach of his technical expertise and to create opportunity for aspiring engineers in Hunza, northern Pakistan. Christian leads Kavora's architecture and enforcement-layer design.

His work is shaped by time spent in northern Pakistan, where he saw world-class talent emerging in highly remote conditions.

Faizan is a product and go-to-market founder with a background in equity research and building AI-native SaaS products. He founded EchoSync AI, an AI-powered review marketing platform acquired in 2024, and previously led fundraising and growth at Eucalyptus Labs, scaling from zero to over 500,000 active devices. With five years of equity research experience at Seeking Alpha, he brings analytical rigor to enterprise customer discovery. At Kavora, he leads GTM and product strategy.

His approach to product-market fit is informed by years of analyzing how markets validate enterprise software adoption cycles.

Status

Active Development

Kavora is currently working with early enterprise design partners.

We are validating runtime governance approaches across:

- Internal AI agents

- Vendor-hosted AI systems

- Secure enterprise environments

Interested in governing AI at runtime?

We are scheduling conversations with enterprise leaders exploring AI governance, security, and responsible deployment.